8 Types of Content Insta's Moderation Wrongly Censors (& why we need more nuanced sexuality algorithms)

Social media algorithms aren’t just sexist, racist, homophobic, fatphobic, etc. - they’re also just plain dumb. We need smarter algorithms STAT - the proof is in these screenshots.

Hey fam,

I’m back after a looong hiatus from this newsletter - quick apologies to anyone who subscribed in recent months with high expectations. Honestly, I’m with ya: I expected more from me too, but when you’re working two full-time jobs on top of trying to stay sane during a global pandemic, passion projects sometimes need to be put on hold.

I did, however, find the time to make this:

I also drafted these (unfinished, unpublished newsletters from the last 5 months):

So if you’re curious about my thoughts on any of these topics, feel free to hit me up cuz I’ve got em - FYI this would literally make my day. I appreciate you all more than you can imagine <3

Some big news before we dive in: THE LIPS APP LAUNCHED💋🥳 and we gained 10K users in the first month… (pretty unheard of in startup land)… and finally have our lil digital paradise of art, love, and empowerment to play in. Now that we’ve got users, it’s time to grow and build out the platform (esp. the marketplace where our artists & brands can sell). But first, we need capital (building & maintaining an app is costly). Soooo rather than sell out to greedy Silicon Valley investors, we decided to do something different - to sell ownership of our company to the community & more values-aligned investors in what is called equity crowdfunding.

Here is the link to our investment campaign 💜- we legit JUST launched it this week and are inviting our friends to invest first with the best terms (most bang for your buck) before launching publicly in 7 days. If you believe in what we’re building, join us and become an investor😍 (minimum is $100). I’m going to cover this again in detail in my next newsletter - why it’s better for many under-estimated entrepreneurs, more ethical than VC, better for communities, etc. - but reach out if you have any questions and also pleeaasssee help me out by sharing this link around with anyone you know who loves startups, maybe is passionate about feminism, is queer, an artist, your dad, ANYONE.

Thanks y’all. Lots of love,

Val

8 Types of Content Insta's Moderation Wrongly Censors (& why we need more nuanced sexuality algorithms)

The Instagram moderation algorithm is as un-woke as the cis het white dudes who built it.

As many of you probably know, Instagram operates under a set of “Community Guidelines” - kind of like a rule book - that delineate the types of content allowed and not allowed to be posted onto Instagram by its users. These guidelines can be found here if you’re curious and want to read them, but basically they say they strive to “create a safe and open environment for everyone” and therefore ban stuff like hate speech; violence; attacks on race, ethnicity, national origin, sex, gender, gender identity, sexual orientation, religious affiliation, disabilities, or diseases; and nudity.

In theory, this is mostly fine - although nudity is nothing like hate speech or violence. Instagram is a private company that has the right to decide on whatever rules they want or believe will “foster a positive, diverse community.” However, where we should have a real problem with Instagram is in how they go about enforcing those rules. If Community Guidelines are the laws, the moderation algorithm is the law enforcement. And if we’ve learned anything over the past year, we’ve probably realized that many law enforcement bodies: 1) are not representative of community diversity (U.S. police are 84% male, 67% white); 2) have implicit biases and stereotypes that affect their work; 3) surveil and treat people differently based on who they are. Instagram’s moderation algorithm is similar.

So, what I aim to demonstrate below is that content from these 8 categories is commonly misidentified by the law-enforcing algorithm as violating Community Guidelines when, technically - according to the laws - it doesn’t. In other words, the biases in the moderation algorithm mean it’s not even doing its job well because it’s too dumb - especially when it comes to bodies and sexuality - and we need to invest (literally😉) in better, smarter algorithms to manage our digital experiences.

Just in case it wasn’t already obvious, Lips is building this smarter algorithm with our patent-pending machine learning pre-set hashtag system. The hashtags we use on Lips come from the community ourselves - they are the terms WE use to label our complex identities and sexualities - and therefore enable Lips to label and sort content with more nuance than the mainstream platforms are capable of. Over time, this algorithm will become smarter and one day we’ll be able to sell it (for a lot of money..) to companies who want the smarter algorithm but didn’t have a community like ours to help them develop it for themselves - companies like Google Search, IBM Watson, Amazon, and Etsy.

One day, thanks to Lips, the internet’s algorithms will no longer wrongly identify these 8 types of content below as “inappropriate,” won’t censor us inadvertently, and won’t reproduce the detrimental harms our community faces when we’re unable to express our true selves…

1. Sex Workers, Sex Worker Activism

Sex workers are some of Instagram’s most careful and law-abiding users because - like all other influencers - their careers depend on their audiences and customer base. Unfortunately, many, many sex workers deal with wrongful censorship online even while being extremely careful not to break the Community Guidelines by, god forbid, slipping a “female-presenting nipple” (whatever the hell that means).

They get their posts taken down, account visibility restricted (shadow-banned), and most devastatingly their entire accounts (with thousands and thousands of followers) are sometimes deactivated or deleted without warning.

When the accounts of individual sex workers are targeted but men’s magazines like Playboy and Sports Illustrated Swimsuit are able to post photographs with the same amount of exposed body, it demonstrates that the platform accepts female bodies when they are shot through the lens of a male photographer and for the pleasure of a male audience, but not when they are shot for the purposes of women running their businesses, making money, and doing what they want with their bodies for themselves.

This petition was started by @bloggeronapole to raise awareness and combat this unfair censorship. Consider signing and sharing! Here’s a quote:

“Instagram have recently stated that they do not allow or facilitate sexual encounters between adults on the platform. However, a lot of the nudity and sexual references Instagram ban - e.g. strip club shows, erotic dances, references to arousal or pointing users to other platforms such as OnlyFans - do not facilitate sexual encounters. They are merely self-expression, education or, sometimes, direction to take those encounters elsewhere.”

2. Erotic Art / Illustrations

Even illustrations of bodies are often censored…

From Instagram Community Guidelines:

“We know that there are times when people might want to share nude images that are artistic or creative in nature, but for a variety of reasons, we don’t allow nudity on Instagram… Nudity in photos of paintings and sculptures is OK, too.”

Confusing, right??

3. LGBTQIA+ Content

Queer content seems, for some reason, to be flagged as sexual or inappropriate more often than hetero content. We see so many LGBTQIA+ photographers, artists, and influencers talking about their engagement being extremely low and/or their photos being removed for violating guidelines even when - as you’ll see below - they do not violate the guidelines!

4. Female & LGBTQIA+ Sexual Health and Wellness

Pleasure is at the center of sexual health and wellness. Information and products that teach us about our anatomy and bodily functions, and help us achieve pleasure, are solutions to problems women & LGBTQIA+ folks face, such as sexual shame, pain and displeasure, unwanted pregnancy, sexually transmitted infections, sexual assault, rape, and bad communication.

If erectile dysfunction drugs are sexual health, then so are clitoral stimulating vibrators, dildos and the rest.

The Bloomi also just successfully raised capital through an equity crowdfund🎉! Again, I’ll be talking about this more in a couple of days, and about how/why sextech companies like us struggle to fundraise the “traditional” way but why crowdfunding is better and more values-aligned anyways. Stay tuned.

5. Periods

Periods are not inappropriate nor are they sexual.

Period poverty non-profits and lifestyle brands, such as my fellow Harvard alum Nadya Okamoto’s It’s August, are also wrongfully targeted when their literal sole purpose is to educate and to supply period products to folks for whom this information and these products are inaccessible… good job, IG.

6. Fat Bodies

I read somewhere that the algorithm is trained to recognize the amount of visible skin, and when a certain amount of skin is visible in the image, it flags it as inappropriate and therefore sexual… This might explain why SO MANY fat models, activists, and just people are wrongfully targeted for censorship due to nudity. HOW DUMB IS THIS?!?! We can do better.
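For the nerds in the room, here is a toy sketch of why a rule like that would hit bigger bodies harder. To be super clear, this is NOT Instagram's actual code. I have no idea how their system really works, and the cutoff (`SKIN_RATIO_THRESHOLD`), the function names, and the pixel counts are all made up for illustration. It just assumes the rumor is true: count the skin pixels, flag the image if the share is too high.

```python
# Toy sketch only: NOT Instagram's actual system. The threshold, the
# function names, and the pixel counts are all made up for illustration.

SKIN_RATIO_THRESHOLD = 0.40  # hypothetical cutoff


def skin_ratio(skin_pixels: int, total_pixels: int) -> float:
    """Share of the image's pixels that were classified as skin."""
    if total_pixels <= 0:
        raise ValueError("total_pixels must be positive")
    return skin_pixels / total_pixels


def is_flagged(skin_pixels: int, total_pixels: int) -> bool:
    """Naive rule: flag the image once the skin share crosses the cutoff."""
    return skin_ratio(skin_pixels, total_pixels) >= SKIN_RATIO_THRESHOLD


# Two people in the same swimsuit, same pose, same 1000x1000 frame.
# The larger body simply fills more of the frame, so more pixels are skin.
TOTAL = 1000 * 1000
thin_model_flagged = is_flagged(skin_pixels=300_000, total_pixels=TOTAL)
fat_model_flagged = is_flagged(skin_pixels=450_000, total_pixels=TOTAL)

print(thin_model_flagged, fat_model_flagged)  # False True
```

Same outfit, same pose, and only one of them gets flagged. A rule that only counts skin never asks what the image is actually showing.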

7. Disabled Bodies

Disabled bodies are also commonly wrongfully censored. A quote from this USA Today article captures it best:

Additionally, notions of indecency aren't just gendered, they also align with culture ideas of appropriate appearance — meaning that women of color, disabled women, trans women and fat women are under stricter scrutiny compared to their white, thin and able-bodied peers. The bodies, sexualities and desires that are allowed online, translates itself into the bodies, sexualities and desires that are accepted in "offline" society.

8. Anti-Racist Advocacy

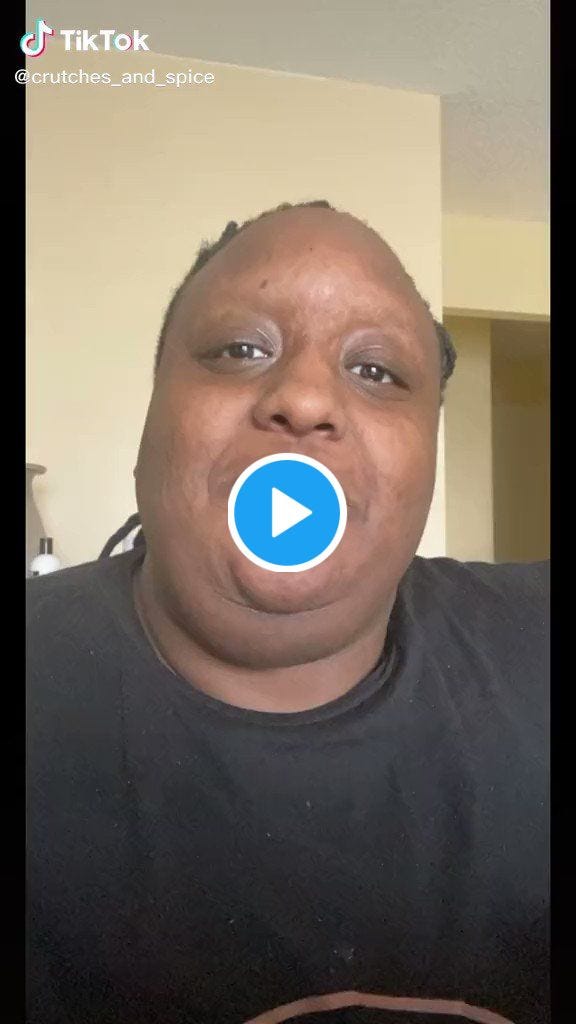

Okay, so this one is a little different from the rest yet still proves the same thesis - that the algorithms are too dumb to know the difference between hate speech in action and anti-racism advocates educating about hate speech or white supremacy.

This is like a couple of weeks ago when, after the Georgia massage parlor murders, a bunch of people complained that their TikTok feeds were vastly different from usual -

Why? Because it seems TikTok’s algorithms are not nuanced enough to tell the difference between someone screaming “white supremacy” because that is what they believe in and People of Color using the platform to talk about racism they’ve experienced.
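Here is a tiny sketch of what "not nuanced enough" can look like in practice. Big caveat: this is my own toy example, not the real moderation code of any platform, and I'm assuming a simple phrase blocklist purely for illustration. `BLOCKED_PHRASES`, `naive_flag`, and the two captions are all hypothetical.

```python
# Toy sketch only: NOT any platform's real moderation code.
# The blocklist and the example captions are made up for illustration.

BLOCKED_PHRASES = ["white supremacy"]  # hypothetical blocklist


def naive_flag(caption: str) -> bool:
    """Flag a caption if it contains any blocked phrase, ignoring context."""
    text = caption.lower()
    return any(phrase in text for phrase in BLOCKED_PHRASES)


promoting = "White supremacy forever"
educating = "Here is how white supremacy shaped the racism I experienced"

print(naive_flag(promoting), naive_flag(educating))  # True True
```

Both captions get flagged, because a phrase match has no idea who is speaking or why. Telling those two apart takes context, and context is what the community itself is best placed to supply.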

That’s all I’ve got for today!

Thanks again for being here. To just come full circle on the law enforcement metaphor, communities themselves are the best equipped to keep each other safe, full stop. This is why so many community-led alternatives to police are popping up around the country, and why the Biden administration is actively funding more of them… This is what works.

So, join our community-led initiative to make the internet a safer, healthier, SMARTER place for us all:)
